Output Visualizations

Lane Changes

Ego Lane Change

Ego initiates and completes a lane change with a smooth, lane-aligned trajectory.

Dual Lane Change

Ego performs a two-lane shift while maintaining comfort and dynamic feasibility.

Other Agents' Lane Change

Surrounding vehicles change lanes; the policy adapts and preserves safe spacing.

Courtesy Maneuvers

Courtesy

Ego yields appropriately to enable safe and cooperative interactions.

More Courtesy

Ego adopts a more conservative yielding strategy to reduce conflicts with surrounding traffic.

Longer Wait Time (Courtesy)

Ego waits longer before proceeding to remain courteous and avoid disrupting others.

Adaptive Cruise Control

Adaptive Cruise Control

Ego maintains a safe headway while producing smooth speed and acceleration profiles.

ACC Courtesy

Adaptive cruise control with added courtesy, yielding when beneficial for traffic flow.

Unprotected Left Turn

Unprotected Left Turn

Ego navigates an unprotected left turn while interacting safely with oncoming traffic.

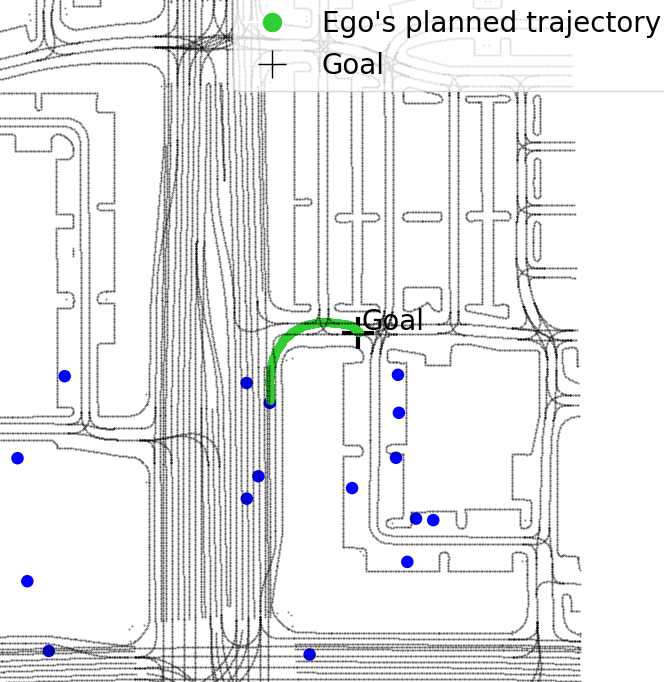

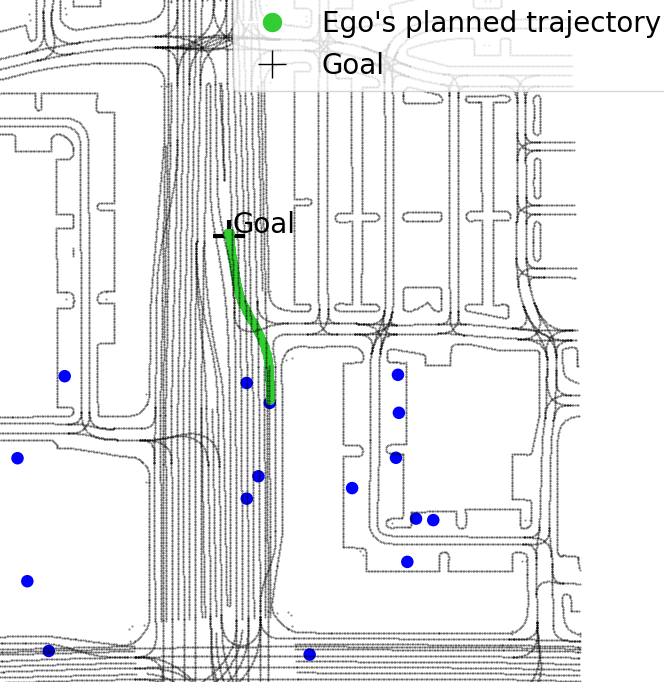

Goal Change

(a) The ego takes a sharp right exit.

(b) From the same initial pose, the goal is changed to a left lane change. The policy adapts and produces smooth, lane-aligned trajectories.